Proxmox¶

Installing Proxmox VE¶

After the server is installed, installing the Proxmox VE service takes 15-20 minutes. You will receive a notification about the installed server, with a link of the form https://ip-address:8006, at the email address associated with the account. Use the link from the email to open the Proxmox VE management web interface:

- Login - root;

- Password - system password.

After following the link, a window for entering a login and password will open:

After you enter the login and password, the main window opens; all settings are made there:

Configuring the Network interfaces¶

After the system is installed, the network interface configuration looks like the picture below: one physical interface is configured and an IP address is assigned to it. Select the required server and go to the Network section:

Network settings on the Proxmox VE server:

Open the settings of the enp1s0f1 physical interface (click the name of the network interface, then the Edit button), copy the IP address, delete it, and click the OK button:

Add a Linux Bridge that will work through the enp1s0f1 physical interface. The steps are shown below:

Enter the IP address that was copied earlier and specify the physical interface (enp1s0f1 in our example). Be sure to specify the port name; otherwise you will need to connect via iLO and fix the configuration file by hand. Then click the OK button:

As a result, the network settings should look like this:

After making sure that everything is configured correctly, click on the Apply Configuration button.
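For orientation, the bridge created in the steps above ends up as a stanza in the host's /etc/network/interfaces file. The sketch below only renders such a stanza as text so it can be compared with what the web interface produces. The bridge name vmbr0 and the addresses (taken from the 192.0.2.0/24 documentation range) are assumptions for illustration, not values from this guide.

```python
def render_bridge_config(bridge: str, port: str, address: str, gateway: str) -> str:
    """Return an /etc/network/interfaces fragment for a Linux bridge on one port."""
    lines = [
        # The physical port carries no IP address of its own.
        f"iface {port} inet manual",
        "",
        # The bridge takes over the address that was removed from the port.
        f"auto {bridge}",
        f"iface {bridge} inet static",
        f"\taddress {address}",
        f"\tgateway {gateway}",
        f"\tbridge-ports {port}",
        "\tbridge-stp off",
        "\tbridge-fd 0",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical values: vmbr0 and the 192.0.2.x addresses are placeholders.
    print(render_bridge_config("vmbr0", "enp1s0f1", "192.0.2.10/24", "192.0.2.1"))
```

If the bridge-ports line is missing from the real file, the bridge has no uplink, which is the situation the port name warning above is about.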

The disk subsystem configuration¶

Single disk servers can host both the Proxmox VE system itself and virtual servers, ISO images, container templates, operating system templates, etc.

Note

In our example, the server has two disks. The system is installed on the first disk, which will also hold ISO images and backups of virtual servers. The second disk will be configured to host virtual servers. These settings are shown only as an example: if one of the disks fails, either the operating system will stop working or the virtual servers will be lost. A more fault-tolerant layout would be, for example, to combine two disks into RAID1 for the system and another two disks into RAID1 for virtual servers (the size of the disks depends on the infrastructure that is planned to be hosted on this server).

Adding a second disk¶

Connect to the server via SSH and check that the disk is present and not in use by the system. You can do this with the lsblk command. The picture below shows that the system is installed on the sda disk (sda1 / sda2), while the sdb disk is free; its size is 223 GB:
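The same check can be scripted. The sketch below parses the JSON that `lsblk --json` prints and lists whole disks that have neither partitions nor a mount point. The sample output is hand-written to mirror the layout described above (the sizes and mount points in it are assumptions), and the check is deliberately simple: it does not detect every way a disk can be in use.

```python
import json

# Hand-written sample in the shape of `lsblk --json` output (values are illustrative).
SAMPLE = """
{"blockdevices": [
  {"name": "sda", "size": "223.6G", "type": "disk", "mountpoint": null,
   "children": [
     {"name": "sda1", "size": "1G", "type": "part", "mountpoint": "/boot"},
     {"name": "sda2", "size": "222.6G", "type": "part", "mountpoint": "/"}
   ]},
  {"name": "sdb", "size": "223.6G", "type": "disk", "mountpoint": null}
]}
"""


def free_disks(lsblk_json: str) -> list[str]:
    """Return names of whole disks with no child devices and no mount point."""
    free = []
    for dev in json.loads(lsblk_json)["blockdevices"]:
        if dev.get("type") != "disk":
            continue
        # Older lsblk versions report "mountpoint", newer ones "mountpoints" (a list).
        mounted = dev.get("mountpoint") or any(dev.get("mountpoints") or [])
        if not dev.get("children") and not mounted:
            free.append(dev["name"])
    return free


if __name__ == "__main__":
    print(free_disks(SAMPLE))
```

On a real host the input would come from running `lsblk --json` and passing its output to `free_disks`.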

After making sure that the disk is present and not in use, go to the Proxmox VE web interface:

Choose a server (in our example - 2667) and go to the Disks >> LVM section:

Click on the Create: Volume Group button:

A window will be opened in which the system will offer to create a partition on a free disk, in our case it is sdb:

In the same window (in the Add Storage section), enter a name for the new storage. In our example, the name vms is used, because it is intended for hosting virtual servers. Then click Create:

As a result, the disks should be shown in the main window:

As you can see, the vms storage has been added.

After the second disk is added and its purpose is defined, reconfigure the system so that all virtual servers are automatically placed on the second disk. To do this, click Datacenter on the left side and choose Storage:

Virtual servers can be stored on the system disk - sda, as well as on a new disk - sdb:

We have two disks, and we decided that only ISO images and backups will be stored on the system disk, while virtual servers, system images, templates, and containers will be placed on the second disk, sdb. To configure this, select the required storage (local or vms) and click the Edit button at the top of the screen.

Select disk local:

Click the Content drop-down list. All items are selected by default; click an item again to deselect it. Leave only ISO image and VZDump backup file selected and click OK to save the settings:

After the settings have been made, the following result should be obtained:

In the local disk settings, only the ISO image and VZDump backup file items are set.

Do the same for the vms disk: click Edit, select the two items Disk image and Container to host virtual servers, and click OK to save:
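The content choices made above are stored on the host in /etc/pve/storage.cfg, where each web interface label corresponds to a short key. The sketch below renders the two entries as text under some assumptions: the storage types (dir for local, lvm for vms), the path /var/lib/vz, and the volume group name vms are inferred from the setup described here, so compare the result with the real file rather than writing it back.

```python
# Web interface labels and the keys used for them in storage.cfg.
CONTENT_KEYS = {
    "ISO image": "iso",
    "VZDump backup file": "backup",
    "Disk image": "images",
    "Container": "rootdir",
    "Container template": "vztmpl",
}


def render_storage(kind: str, name: str, options: dict[str, str], labels: list[str]) -> str:
    """Render one storage.cfg entry with the given options and content labels."""
    content = ",".join(CONTENT_KEYS[label] for label in labels)
    lines = [f"{kind}: {name}"]
    lines += [f"\t{key} {value}" for key, value in options.items()]
    lines.append(f"\tcontent {content}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Assumed types and options; only the content selections come from the text.
    print(render_storage("dir", "local", {"path": "/var/lib/vz"},
                         ["ISO image", "VZDump backup file"]))
    print(render_storage("lvm", "vms", {"vgname": "vms"},
                         ["Disk image", "Container"]))
```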

To install a virtual machine, you need an ISO image, which must first be uploaded to Proxmox. This is not difficult, but remember that an image can only be uploaded to a storage that supports this content type. In our example, the local storage was chosen, so we will upload the image there. To do this, open the desired storage and select the ISO Images tab, then click the Upload button and choose the required file:

A window will be opened in which you must specify the path to the ISO image:

An alternative way to get an ISO image onto the Proxmox server is to copy it with scp or to download it directly on the server (with wget). If the default storage created during installation is used, the image must be placed in the /var/lib/vz/template/iso directory. Wait for the transfer to finish; after that, the image can be used to install the OS on a virtual machine.
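As a small illustration of the scp route, the sketch below builds the command line that copies a local ISO file into the default ISO directory named above. It only constructs the argument list and does not run anything; the host address, the file name, and the choice of the root user are placeholders assumed for the example.

```python
import shlex
from pathlib import PurePosixPath

# Default ISO directory of the "local" storage, as stated in the text.
ISO_DIR = PurePosixPath("/var/lib/vz/template/iso")


def scp_iso_command(local_iso: str, host: str, user: str = "root") -> list[str]:
    """Build an scp argument list that copies an ISO into the Proxmox ISO directory."""
    name = PurePosixPath(local_iso).name
    if not name.lower().endswith(".iso"):
        raise ValueError(f"{name!r} does not look like an ISO image")
    return ["scp", local_iso, f"{user}@{host}:{ISO_DIR / name}"]


if __name__ == "__main__":
    # Hypothetical file name and host address.
    print(shlex.join(scp_iso_command("/tmp/CentOS-7.iso", "192.0.2.10")))
```

Once the file is in that directory, it should show up in the ISO Images tab of the storage.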

A virtual machine installation¶

Click on Datacenter in the panel on the left side to go to the main window:

Then click the Create VM button in the upper right corner:

In the General section, fill in the VM ID and the server's name (we kept the default ID, 100):

In the OS section, choose the ISO image that was downloaded earlier and also the type and version of the operating system on the right side:

In the System section, you can set the required SCSI Controller, BIOS (Legacy, UEFI) and other parameters:

In the Disks section it is possible to specify: BUS/Device: (IDE, SCSI, SATA...), Storage: point out the location of the virtual server disk on the server's physical disk, Disk size: size in GB:

Indicate the number of cores in the CPU section:

In the Memory section, specify the amount of RAM:

In the Network section, specify the interface (Bridge) through which the virtual server will work (in our example, this is the Linux Bridge). In the drop-down list on the right side, choose the network card model (Model) for the virtual server. For example, the e1000 model offers the best compatibility with Windows systems:

After performing these steps, the virtual server should be displayed in the main window (the name 100 is set by default in the first window, it can be changed if necessary):

Next, right-click the server's name and click Start. If the virtual server does not start, reboot the entire physical host: the network and the disk subsystem have been reconfigured, and restarting the Proxmox server is recommended to apply those settings:

After starting the virtual server, open a console window: either choose Console on the right side, or right-click the server and choose Console at the very bottom of the drop-down list:

A console window will be opened, either alone or on the right side. In our example, we install CentOS 7:

Select Install CentOS 7 to start installing the system. In the first window, choose the language and click Continue:

Set all the necessary settings in the main window:

In the Network & HOST NAME section, enter the server's name and click the Configure button:

In the window that opens, go to the IPv4 Settings tab and set Method to Manual (the IP address will be set manually). Enter the IP address, specify the DNS settings, be sure to tick the box Require IPv4 addressing for this connection to complete, and click Save.

Then, in the window that opens, set the switch to ON and click Done to save the settings:
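Before typing a manual configuration into the installer, it can help to sanity-check the values. The sketch below uses Python's standard ipaddress module to catch two common slips: a gateway outside the server's subnet and an address that is the subnet's network or broadcast address. The gateway parameter and all addresses are assumptions for illustration; the text above only mentions the IP address and DNS settings.

```python
import ipaddress


def check_static_ipv4(address: str, gateway: str, dns_servers: list[str]) -> list[str]:
    """Return a list of problems found in a manual IPv4 configuration."""
    problems = []
    iface = ipaddress.IPv4Interface(address)  # e.g. "192.0.2.20/24"
    network = iface.network
    # Skip the host-address check for /31 and /32, which have no reserved addresses.
    if network.prefixlen < 31 and iface.ip in (network.network_address,
                                               network.broadcast_address):
        problems.append(f"{iface.ip} is the network or broadcast address of {network}")
    if ipaddress.IPv4Address(gateway) not in network:
        problems.append(f"gateway {gateway} is outside {network}")
    for server in dns_servers:
        try:
            ipaddress.ip_address(server)
        except ValueError:
            problems.append(f"DNS server {server!r} is not a valid IP address")
    return problems


if __name__ == "__main__":
    # Placeholder addresses from the documentation ranges.
    print(check_static_ipv4("192.0.2.20/24", "192.0.2.1", ["8.8.8.8"]))
```

An empty list means none of these particular problems were found; it does not prove that the address is actually routed to the server.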

After making changes in the main window, click on the Begin Installation button:

The system will ask you to set a password for the root in the account settings window. You can also create an additional user here. At the same time, packages for the system will be installed:

At the end of the installation, the system will report successful installation and ask you to set a password for the root user, if it has not been set yet. To complete the configuration, click on the Finish configuration button:

After that, the system will ask you to click on the Reboot button to reboot:

As soon as the virtual server reboots, you can access it via SSH by connecting to its IP address as the root user with the password that was set during installation in the previous step.

Container creation¶

To create a container, you must first download the operating system template that will be used in the container. To get started, make sure that the storage is allowed to hold container templates.

Then go to Datacenter and choose Storage on the right side:

The disks must have the Container template parameter:

If this parameter is not present, you need to choose the necessary disk with Type: Directory and click on the Edit button. In the Content drop-down list, select Container Template and click OK to save:

Then click the name of the disk on which the Container Template parameter is set (in our case, the local disk), choose CT Templates on the right side, and go to the window where you can load the required template:

To download templates, click on the Templates button in the template download window:

In the opened window, select the version of the operating system that is required in the container. Click on its name and the Download button:

We downloaded Alpine:

Then right-click on the name of the physical server (in our case it is 26671) and choose Create CT:

In the General section, set the name of the container and specify the root password to enter the container:

Specify the downloaded image in the Template section (in our case, Alpine):

In the Disks section, use the Storage field to specify which physical disk the container will be placed on, and the Disk size (GiB) field to set the size of the disk. In addition to rootfs, on the right side you can add other partitions that the container might need, for example /db for storing database files:

Specify the number of cores in the CPU section:

In the Memory section, specify the amount of RAM:

In the Network section, specify Bridge, enter the IP address that was purchased for virtual servers, specify the Static parameter and press the Next button:

Enter the address and a domain name in the DNS section if there are any.

For the final check, all settings that have been set are displayed in the Confirm window. If everything is correct, click on the Finish button:

A window with the task execution will be opened. In case of a correct assembly, the status TASK OK should be received:

After the settings have been made in the main window, container 101 should be displayed on the right side:

Right-click on it and press Start. Then choose the Console section and the container's console window will be displayed on the right:

Log in as root with the password that was set during configuration to enter the container.

Then you need to check the created disk partitions and network settings:

As you can see, the IP address and mount points are correct. You can check the correctness of the network by pinging the address 8.8.8.8:

The initial setup of the Proxmox server is over.

Testing the Proxmox cluster and configuring Ceph¶

Note

Proxmox replication improves the availability and performance of your virtualization infrastructure. It allows you to create copies of virtual machines and to move them to other servers in case of a failure.

Prior configurations and connection to the server¶

Before starting the replication procedure, you need to make a number of settings and connect to the first server:

Step 1. In the control panel, go to the Cluster section and click the Create Cluster button:

Step 2. Enter the name of the cluster and the network (or list of subnets) for the stable operation of the cluster. Then click on the Create button:

Step 3. In the Cluster section, go to the Join information tab and copy the information from the Join information window. This information is needed to connect the second server to the cluster:

Step 4. On the second server, in the Cluster section, go to the Join Cluster tab:

And enter the information for the connection copied in the previous step:

Enter the root password and the IP address of the server to connect to, then click the Join button (labeled with the cluster name):

Step 5. Wait for the connection to the cluster:

Creating a ZFS partition to set up replication of virtual server¶

There are two disks installed in our server: the system is installed on the first one, and the second one is intended to host virtual servers that will be replicated to a similar physical server. Connect to the physical server via SSH and run the lsblk command. The example below shows an unpartitioned disk, sdb, which we will use to host servers and set up replication:

In the main window of servers’ management, choose a physical server and create a ZFS partition:

In the window that opens, enter a name in the Name field. The Create: ZFS menu is divided into several functional blocks: you can configure RAID on the right side and, below, choose the disks to be combined into a RAID group.

Pay attention to the message "Note: ZFS is not compatible with disks backed by a hardware RAID controller." According to the recommendation (a link to the documentation will be displayed on the screen), disks for ZFS should be presented to the system bypassing the hardware RAID controller. After completing the settings, click the Create button to finish adding the disk:

As a result, the disk subsystem is configured on the physical servers:

After completing the above settings, you can proceed to install the virtual server by following the virtual machine installation instructions.

Configuration of replication¶

In the main server management window, choose the virtual server that you want to replicate. In our case it is located on the physical server prox1, and the name of the virtual server is 100 (CentOS 7).

Step 1. Go to the Replication section and click on the Add button:

Step 2. In the window that opens, specify the physical server to which replication will be performed, as well as the replication schedule:

The result of successful configuration of replication can be seen by selecting the necessary physical server and clicking on the Replication button. On the right side, you will see the servers set for replication, the replication time, and the current status.

Replication operation¶

A cluster of two servers with replication and no shared disk works well only while nothing fails: during planned work, you can simply move a virtual server by right-clicking its name and selecting the Migrate item. If one of the two physical servers fails, however, the switch-over will not occur, and you will have to restore the virtual server manually. For this reason, the example below uses a more stable and reliable solution, a cluster of three servers. The third server is added by following the same steps described above for joining a physical server to the cluster.

Disk information:

- data_zfs - a zfs partition is created on each server to configure replication of virtual servers;

- local - the disk on which the system is installed;

- pbs - Proxmox Backup Server;

- rbd - Ceph distributed storage.

Network setup information:

- Public network - intended to manage Proxmox servers, also required for the operation of virtual servers;

- Cluster Network - serves to synchronize data between servers, as well as the migration of virtual servers in the event of a physical server failure (the network interface must be at least 10G).

An example of setup in a test environment:

After deploying a cluster of three servers, you can configure replication on 2 servers. Thus, three physical servers will participate in the migration. In our case, replication is configured for prox2 and prox3 servers:

To enable the HA mode (high availability, virtual server migration) on virtual servers, you need to click on Datacenter, choose the HA item on the right side and click on the Add button:

In the opened window, in the VM drop-down list, select all the servers on which you want to enable HA and click the Add button:

An example of successful addition:

We can also check that the HA mode is enabled on the virtual server itself. To do this, you need to click on the necessary virtual server (in our case, CentOS7) and choose the Summary section on the right side.

In our example, the HA mode is up and running. You can now disable the first server and check that the VM migrates to one of the available physical servers:

Testing HA operation in case of server failure¶

After the virtual server replication is correctly configured, we can test the operation of the cluster. In our case, we disabled all network ports on the physical server prox1. Some time later (4 minutes in our case), the CentOS 7 virtual server switched over to another physical server and became accessible over the network again.

An example of checking the switching result and server availability:

As a result of this step, a cluster of three servers was configured, with internal ZFS disks and the HA option enabled on the virtual server.

Switching a virtual server from one physical to another¶

To switch a virtual server from one physical server to another, choose the required server and right-click on it. In the opened menu, select the Migrate item:

After you click Migrate, a window opens in which you can select the server to which the virtual server will be migrated. Choose the server and click Migrate:

The status of the migration can be tracked in the Task window:

After the migration is successfully completed, the message TASK OK will be displayed in the Task window:

We will also see that our virtual server was moved to the prox3 server:

Testing Ceph Operation¶

Attention

We set up Ceph as a test and it wasn't used in a production environment.

It is very easy to find a lot of Ceph installation materials on Proxmox on the Internet, so we will briefly describe only the installation steps. To install Ceph, you need to choose one of the physical servers, select the Ceph section and click on the Install Ceph button:

The Ceph version is set by default. You need to click the Start nautilus installation button. The system will ask you to specify the network settings after installation. In our case, the configuration was carried out from the first server and its IP addresses are indicated as an example:

Click the Next and Finish buttons to complete the installation. The configuration is performed only on the first server, and it will be transferred to the other two servers automatically by the system.

Then you need to configure:

- Monitor - acts as the coordinator and handles the exchange of information between servers. It is advisable to create an odd number of monitors to avoid a split-brain situation. Monitors work in a quorum: if more than half of the monitors fail, the cluster will be blocked to prevent data inconsistency;

- OSD - a storage unit (usually a disk) that stores data and processes clients' requests by exchanging data with other OSDs. Generally, each OSD has a separate OSD daemon that can run on any machine that has that disk installed;

- Pool - a pool that combines OSD. It will be used to store virtual disks of servers.
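The quorum rule for monitors described in the list above can be written down in a few lines. This is a simplified model that assumes the usual strict-majority rule (more than half of the monitors must be alive); it is an illustration of the arithmetic, not Ceph's actual implementation.

```python
def has_quorum(total_monitors: int, alive_monitors: int) -> bool:
    """True when a strict majority of the monitors is alive."""
    if not 0 <= alive_monitors <= total_monitors:
        raise ValueError("alive_monitors must be between 0 and total_monitors")
    return 2 * alive_monitors > total_monitors


def tolerated_failures(total_monitors: int) -> int:
    """Largest number of monitors that can fail while a majority remains."""
    return (total_monitors - 1) // 2


if __name__ == "__main__":
    for total in (2, 3, 4, 5):
        print(total, "monitors tolerate", tolerated_failures(total), "failure(s)")
```

Under this model, four monitors tolerate no more failures than three, which is one way to see why an odd number is recommended.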

Then you need to add servers with the Monitor and Manager roles. To do this, click on the name of the physical server, go to the Monitor section, choose the Create item, and select the servers united in the cluster. Add them one by one:

Similar actions must be performed for servers with the Manager role. More than one server with this role is required for the correct operation of the cluster.

Adding OSD disks is similar:

According to the recommendation (a link to the documentation will be displayed on the screen), disks should be presented to the system bypassing the hardware RAID controller. Using a hardware RAID controller can negatively affect the stability and performance of the Ceph implementation.

An example of a configured Ceph (/dev/sdc disk is on all three servers):

The last step of Ceph configuration is to create a pool, which will be specified later while creating virtual servers. To do this, click on the name of the physical server, go to the Pools section, and make the following settings:

Description of used parameters' meanings:

- If Size=3 and Min.Size=2, then everything will be fine as long as two of the three OSDs of a placement group work. If only one OSD remains, the cluster will freeze the operations of this group until at least one more OSD "comes to life".

- If Size=Min.Size, then the placement group will be blocked when any OSD included in it crashes. Because data is heavily "smeared" across OSDs, most crashes of even one OSD will end by freezing the entire or almost the entire cluster. Therefore, the Size parameter must always be at least one greater than the Min.Size parameter.

- If Size=1, the cluster will work, but the failure of any OSD will mean permanent data loss. Ceph allows you to set this parameter to one, but even if the administrator does this for a specific purpose and for a short time, they take responsibility for possible problems.

The Pool (rbd) we created above is used while creating the virtual machine. In the Disks section, it will contain the disks of our virtual servers:
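Returning to the Size and Min.Size rules listed above, they can be condensed into a small function. This is a simplified model of a single placement group that ignores recovery and re-replication; the state names are labels chosen for this sketch, not Ceph's own status strings.

```python
def placement_group_state(size: int, min_size: int, osds_up: int) -> str:
    """Classify one placement group by how many of its OSDs are still up."""
    if not 1 <= min_size <= size:
        raise ValueError("min_size must be between 1 and size")
    if not 0 <= osds_up <= size:
        raise ValueError("osds_up must be between 0 and size")
    if osds_up == 0:
        return "no copies available"   # with Size=1 this means the data is gone
    if osds_up < min_size:
        return "blocked"               # I/O is frozen until more OSDs return
    if osds_up < size:
        return "degraded"              # still serving I/O with fewer copies
    return "healthy"


if __name__ == "__main__":
    for up in (3, 2, 1):
        print("Size=3, Min.Size=2, OSDs up:", up, "->", placement_group_state(3, 2, up))
```

With Size equal to Min.Size, the function never returns "degraded": losing any single OSD goes straight to "blocked", which matches the second rule.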

Testing the Proxmox Backup server¶

Prior configurations¶

Note

Proxmox Backup Server 2.3-1 was installed from an ISO file downloaded from the official website. Installation is intuitive and it takes a few clicks, so we didn't describe the steps.

To work with Proxmox Backup Server after installing it, open https://IP-address:8007/ in a browser, using the IP address that was specified at the time of installation.
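The two web interfaces in this guide differ only in port: 8006 for Proxmox VE and 8007 for Proxmox Backup Server. The helper below is a minimal sketch that builds the management URL for either one; the addresses used are placeholders, and the IPv6 bracket handling is included only so the result stays a valid URL.

```python
import ipaddress

PVE_PORT = 8006  # Proxmox VE web interface
PBS_PORT = 8007  # Proxmox Backup Server web interface


def management_url(host: str, port: int) -> str:
    """Build the HTTPS management URL for a host name or IP address."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        return f"https://{host}:{port}/"  # not an IP literal, treat as a host name
    if ip.version == 6:
        host = f"[{host}]"  # IPv6 literals must be bracketed in URLs
    return f"https://{host}:{port}/"


if __name__ == "__main__":
    # Placeholder addresses from the documentation ranges.
    print(management_url("192.0.2.10", PVE_PORT))
    print(management_url("192.0.2.30", PBS_PORT))
```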

You can configure the server according to the needs of the user in the main window.

Below are the main points that, during testing, seemed interesting to us. To store information, you need to add disks. Go to the server via SSH and enter the lsblk command.

In our case, the unpartitioned disk is sdb. We will use it to store backups:

Return to the web interface of the server and go to the Storage / Disks >> ZFS section:

In the opened window you need to:

- Enter the name of the repository;

- Choose RAID if there are several disks;

- Choose disks for storage;

- Click OK to apply the settings.

The execution result:

Creation of users¶

To delineate the access rights and connect a backup system on Proxmox servers, you need to create users who can manage the system.

Step 1. Go to the Access Control menu and click the Add button:

Step 2. Enter the username and password (twice), then click the Add button:

Then you need to create a Namespace - the space for storing information. To do this, go to the Data section:

Then click the Add NS button on the right side:

And create space for each user that was created earlier:

Creation result:

Assignment of access rights¶

Step 1. To assign access rights, go to the Data section:

Step 2. Go to the Permissions subsection and click on the Add button. In the opened window specify the user and Namespace:

Step 3. Choose a role from the drop-down list:

An example of setting up access for the user1 and user2:

Adding a Proxmox Backup server to Proxmox servers is done according to the created access rights:

Fill in all fields, below is an example:

The fingerprint is copied from the Dashboard of the Proxmox Backup server:

After you click the Add button, the created storage will appear in the main window for managing Proxmox servers:

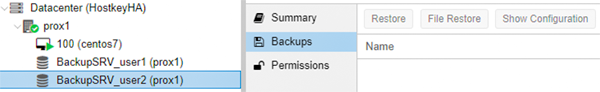

If you go to Storage >> BackupSRV_user2, you will see on the right side that it contains no backups of servers made under other users:

Example for User2:

An example of what was done earlier for the User1:

You can view and manage backups in the Backup section. Here you can add a server to backup and view the execution statuses:

After clicking the Add button, in the window that opens you can choose:

- the servers that you want to back up;

- the backup time;

- the backup mode;

- the Storage on which the backups will be located:

In the Retention tab, you can specify the number of copies kept:
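To make the effect of a copy limit concrete, the sketch below models the simplest retention rule, keeping only the most recent N backups. It is an illustration of the idea, not the actual pruning logic of Proxmox; the function name and the sample timestamps are invented for the example, and other retention options are not modelled.

```python
from datetime import datetime


def split_by_keep_last(backups: list[datetime],
                       keep_last: int) -> tuple[list[datetime], list[datetime]]:
    """Return (kept, pruned) backup timestamps when only the newest N are kept."""
    if keep_last < 1:
        raise ValueError("keep_last must be at least 1")
    ordered = sorted(backups, reverse=True)  # newest first
    return ordered[:keep_last], ordered[keep_last:]


if __name__ == "__main__":
    # Hypothetical nightly backups.
    nightly = [datetime(2023, 1, day, 2, 0) for day in (1, 2, 3, 4)]
    kept, pruned = split_by_keep_last(nightly, keep_last=2)
    print("kept:  ", [b.date().isoformat() for b in kept])
    print("pruned:", [b.date().isoformat() for b in pruned])
```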

Restoring a server from a backup¶

In order to restore the server, you need to switch to the Proxmox Backup Server disk that was connected earlier. Then choose the virtual server to be restored and click on the Restore button:

When restoring the server, you must specify Storage - the partition to which it is possible to restore, and Name - the name of the restored server, as well as server’s parameters:

It is also possible to restore individual files. To do this, choose a server and click the File Restore button. Below is an example of the file system structure and files: